折腾记-讲一下iptables的一个小问题

很早之前就有用过iptables,那时候是早期版本的Ubuntu系统,配置防火墙的时候,是挺复杂的,就是在reject之前添加accept规则,并且当时很多网上资料都是在后续添加就行,这个其实在当时是有问题的,所以比较印象深刻。

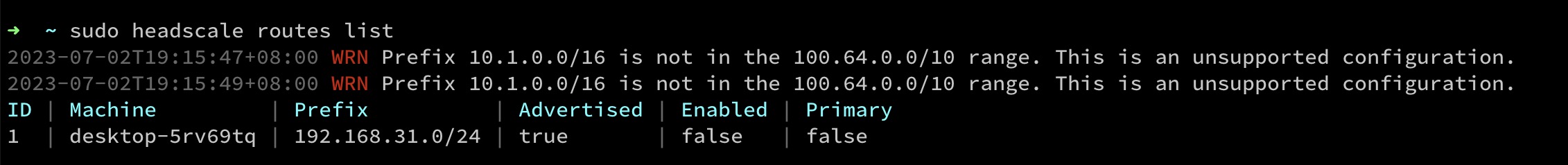

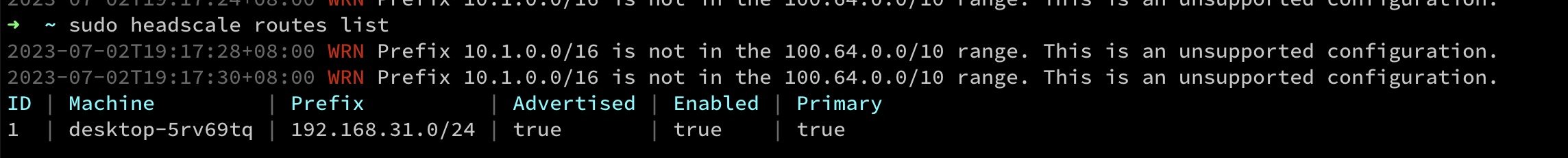

这次碰到的问题是对iptables的概念不熟悉有关,因为iptables的逻辑其实也有一定问题我觉得,比如最基本的命令,iptables -L

这里help显示的是会展示所有chain的rules

但是这样完全把table概念给忽略了,如果不注明理论上应该把所有table的展示出来,或者把包含这个参数并且是默认给出filter这个table的写清楚

所以这次要补一下这部分概念

iptables里首先就是有四种table,分别是 Filter, NAT, Mangle, Raw四种内建表

并且层次结构是 iptables -> Tables -> Chains -> Rules.

filter:

This is the default table (if no -t option is passed). It contains the built-in chains INPUT (for packets destined to local sockets), FORWARD (for packets being routed through the box), and OUTPUT (for locally-generated packets).

包含 INPUT,FORWORD和OUPUT三个chain,默认就是展示这个,所以就会导致用iptables -L 显示不了mangle table的规则

nat:

This table is consulted when a packet that creates a new connection is encountered. It consists of three built-ins: PREROUTING (for altering packets as soon as they come in), OUTPUT (for altering locally-generated packets before routing), and POSTROUTING (for altering packets as they are about to go out).

nat包含 PREROUTING,OUTPUT,POSTROUTING三个chain

mangle:

This table is used for specialized packet alteration. Until kernel 2.4.17 it had two built-in chains: PREROUTING (for altering incoming packets before routing) and OUTPUT (for altering locally-generated packets before routing). Since kernel 2.4.18, three other built-in chains are also supported: INPUT (for packets coming into the box itself), FORWARD (for altering packets being routed through the box), and POSTROUTING (for altering packets as they are about to go out).

mangle主要用于专门的数据包更改,包含 PREROUTING,INPUT,OUTPUT,FORWARD,POSTROUTING 五个chain,注意有内核版本区别,只是现在基本没那么老的内核了

raw:

This table is used mainly for configuring exemptions from connection tracking in combination with the NOTRACK target. It registers at the netfilter hooks with higher priority and is thus called before ip_conntrack, or any other IP tables. It provides the following built-in chains: PREROUTING (for packets arriving via any network interface) OUTPUT (for packets generated by local processes)

包含 PREROUTING和OUTPUT,主要用来配置例外

chain内部会有实际的rule规则,这个具体后面可以介绍