Building a Bare-Bones Reverse Image Search with Milvus and Towhee

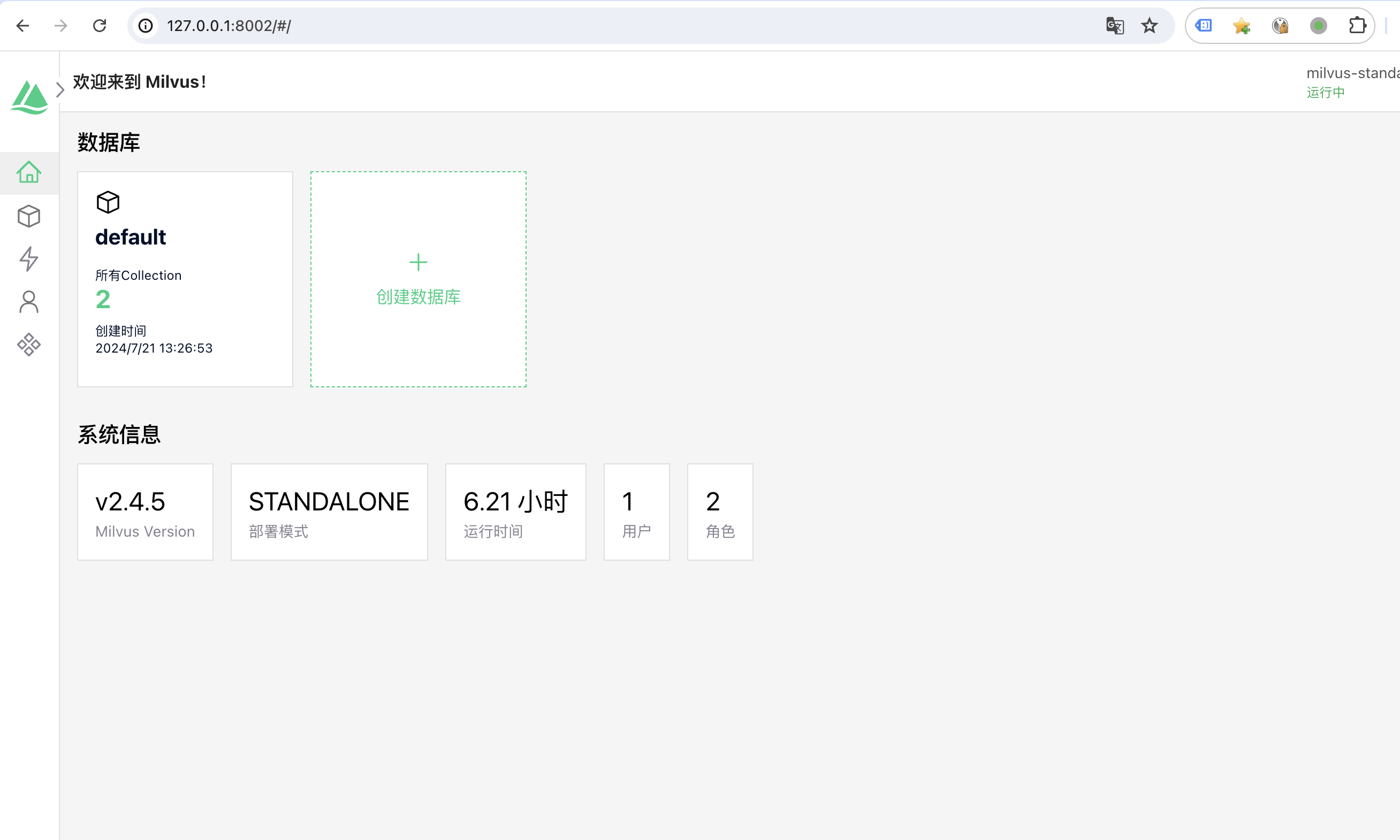

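Last time we used Towhee and Milvus to turn images into vectors. Building on that, the natural next step is reverse image search: finding the stored images that look most like a query image.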

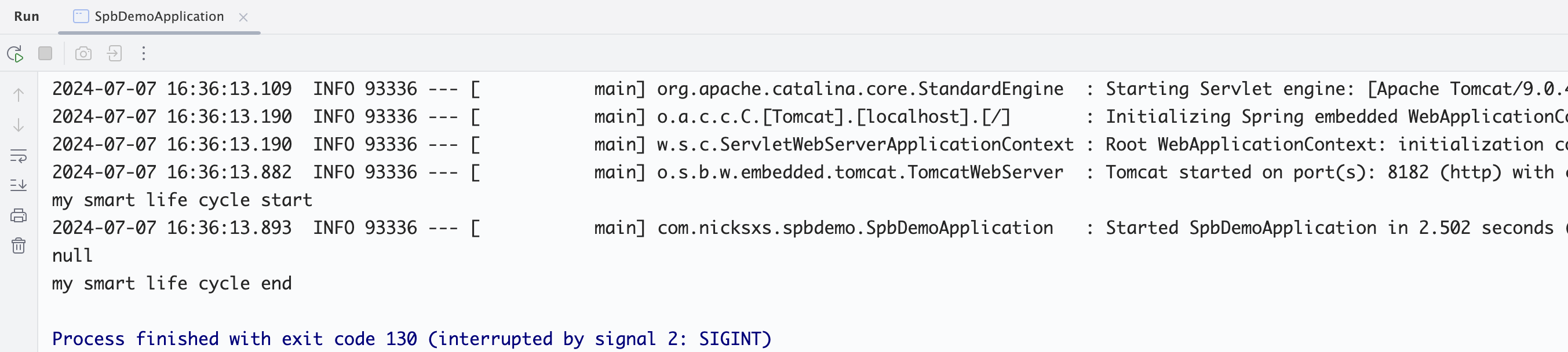

First, let's gather some images:

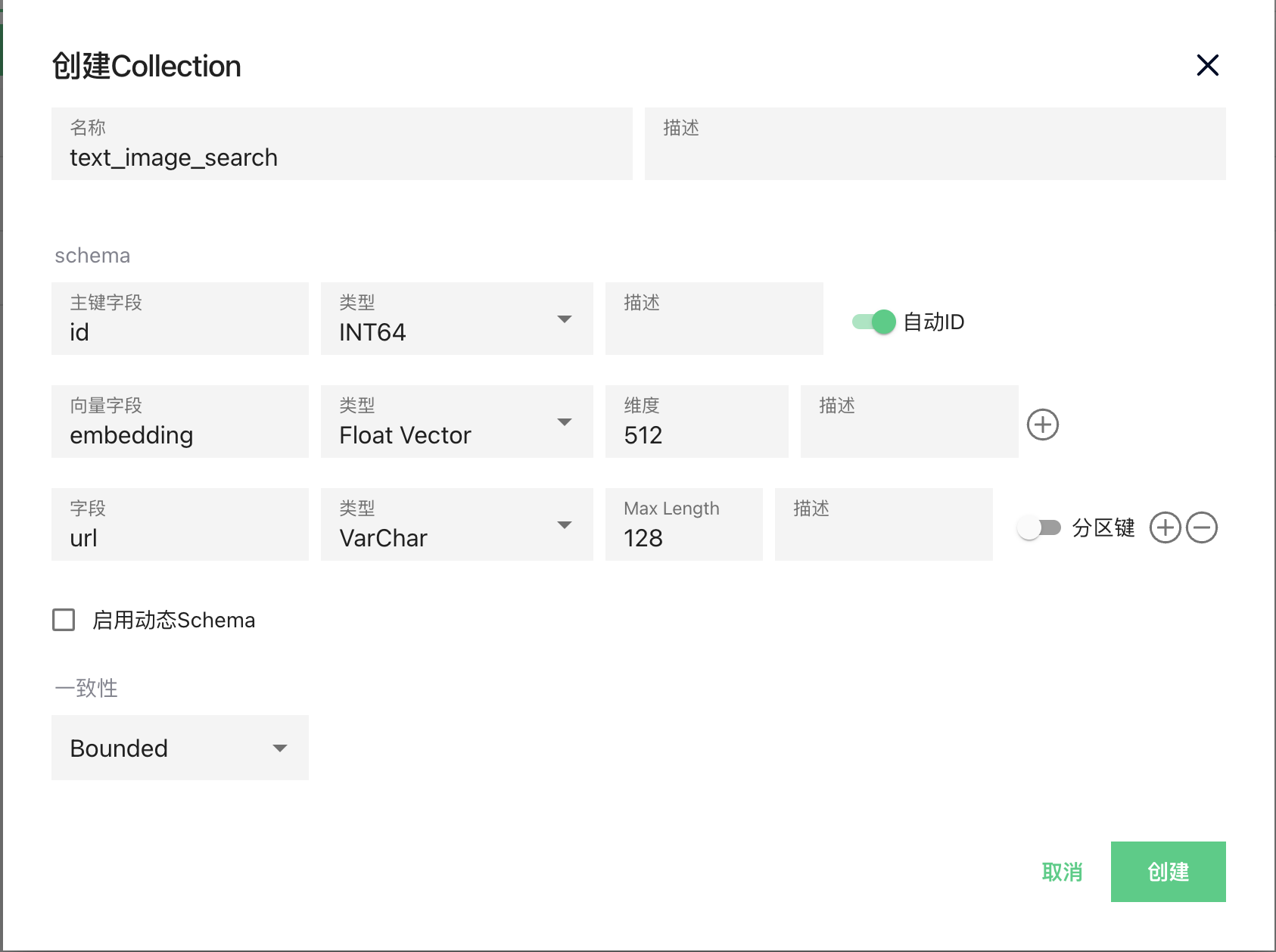

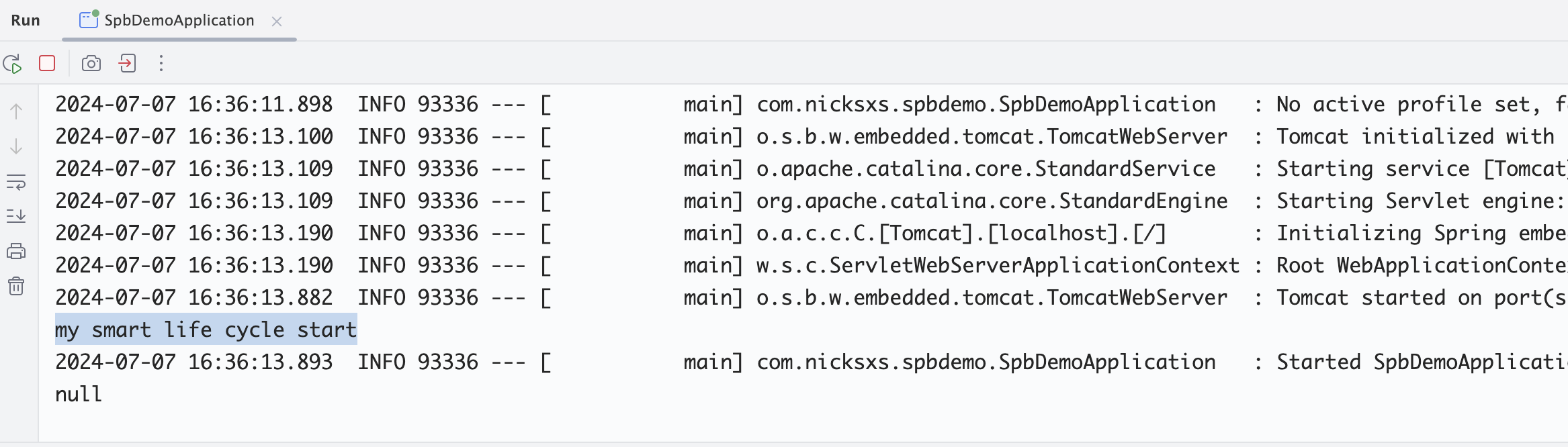

Next, we vectorize all of them and store the vectors in Milvus:

```python
from towhee import AutoPipes, AutoConfig, ops
import towhee
import os
from pymilvus import MilvusClient
import json

# 1. Set up a Milvus client (used later for searching)
client = MilvusClient(
    uri="http://localhost:19530"
)

# 2. Towhee pipeline that inserts rows into the Milvus collection 'text_image_search'
#    (the collection is assumed to already exist with a schema that accepts (path, embedding) rows)
insert_conf = AutoConfig.load_config('insert_milvus')
insert_conf.collection_name = 'text_image_search'
insert_pipe = AutoPipes.pipeline('insert_milvus', insert_conf)

# 3. Create the image-embedding pipeline
image_embedding_pipe = AutoPipes.pipeline('image-embedding')

# 4. Embed every file in ./images and insert (path, embedding) into Milvus
files = os.listdir("./images")
for file in files:
    file_path = os.path.join("./images", file)
    if os.path.isfile(file_path):
        embedding = image_embedding_pipe(file_path).get()[0]
        insert_pipe([file_path, embedding])
```
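`os.listdir` returns every entry in `./images`, so a stray non-image file (for example a `.DS_Store`) would also be sent to the embedding pipeline. Below is a minimal sketch of a filter, assuming images can be recognized by file extension. The helper name `list_image_files` and the extension set are hypothetical choices, not part of Towhee or the original script:

```python
import os

# Hypothetical set of extensions treated as images; adjust to your data.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp", ".webp"}


def list_image_files(directory):
    """Return sorted paths of regular files in `directory` whose extension looks like an image."""
    paths = []
    for name in os.listdir(directory):
        path = os.path.join(directory, name)
        # Compare extensions case-insensitively, so "photo.PNG" is accepted.
        ext = os.path.splitext(name)[1].lower()
        # Skip sub-directories and anything whose extension is not in the allow-list.
        if os.path.isfile(path) and ext in IMAGE_EXTENSIONS:
            paths.append(path)
    return sorted(paths)
```

In the ingest loop, `for file_path in list_image_files("./images"):` could then replace the `os.listdir` / `os.path.isfile` pair.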

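Next, we take a different photo of "Fairy Sister" (神仙姐姐), embed it, and run a vector search in Milvus:

```python
# Continues the script above: reuses `client`, `AutoPipes`, and `json`.
image_embedding_pipe = AutoPipes.pipeline('image-embedding')

# Generate the embedding for the query image
embedding = image_embedding_pipe('./to_search.jpg').get()[0]
print(embedding)

# Retrieve the 5 most similar stored vectors, scored by inner product (IP)
res = client.search(
    collection_name="text_image_search",
    data=[embedding],
    limit=5,
    search_params={"metric_type": "IP", "params": {}}
)

result = json.dumps(res, indent=4)
print(result)
```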
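Conceptually, the `client.search` call with `"metric_type": "IP"` scores stored vectors by their inner product with the query and returns the `limit` highest-scoring ones (Milvus may use an approximate index rather than an exhaustive scan). The brute-force NumPy sketch below illustrates that ranking for small in-memory data; `top_k_inner_product` is a hypothetical name, not a Milvus API:

```python
import numpy as np


def top_k_inner_product(stored, query, k=5):
    """Exhaustive top-k search by inner product (larger score = more similar).

    stored: array-like of shape (n, d), one embedding per row
    query:  array-like of shape (d,)
    Returns (indices, scores) ordered from best to worst match.
    """
    stored = np.asarray(stored, dtype=np.float32)
    query = np.asarray(query, dtype=np.float32)
    scores = stored @ query                          # one inner product per stored vector
    k = min(k, len(scores))
    order = np.argsort(-scores, kind="stable")[:k]   # highest scores first
    return order, scores[order]
```

An exhaustive scan is fine for a handful of images like this demo; the point of Milvus is to keep this kind of lookup fast once the collection grows large.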

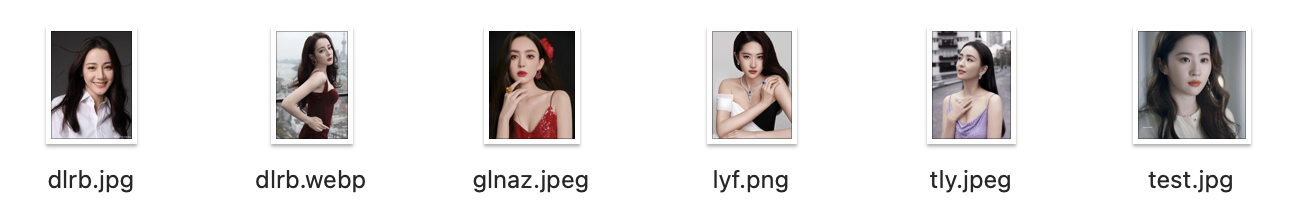

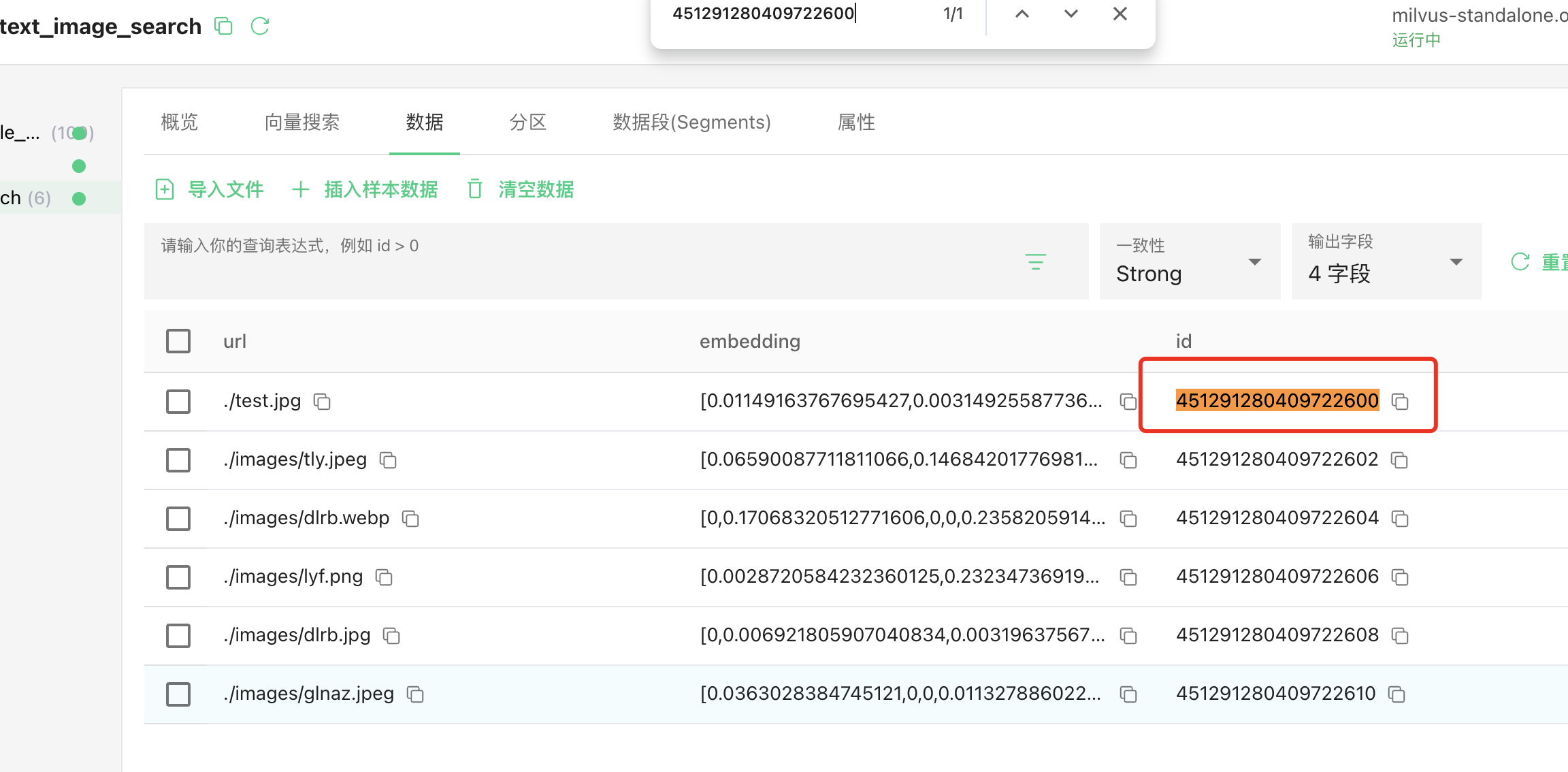

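The search returns five results.

Comparing their `distance` values, the closest match is id=451291280409722600. With the `IP` metric, a larger `distance` means higher similarity, and Milvus lists the best match first.

Checking that image, it is also a photo of Fairy Sister.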
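Milvus already returns hits ordered from best to worst, so `res[0][0]` is normally the closest match. The helper below is a minimal sketch that makes the metric direction explicit. It assumes the `MilvusClient.search` result shape of one list per query vector, each holding hit dicts with `"id"` and `"distance"` keys; the function name `best_hit` is hypothetical:

```python
def best_hit(search_result, metric_type="IP"):
    """Return the best hit for the first query vector, or None if there are no hits.

    For "IP" and "COSINE" a larger distance is better; for "L2" a smaller one is.
    """
    hits = search_result[0]
    if not hits:
        return None
    if metric_type.upper() in ("IP", "COSINE"):
        return max(hits, key=lambda h: h["distance"])
    return min(hits, key=lambda h: h["distance"])
```

Calling `best_hit(res)` on the search result above would pick the hit with the largest inner product.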

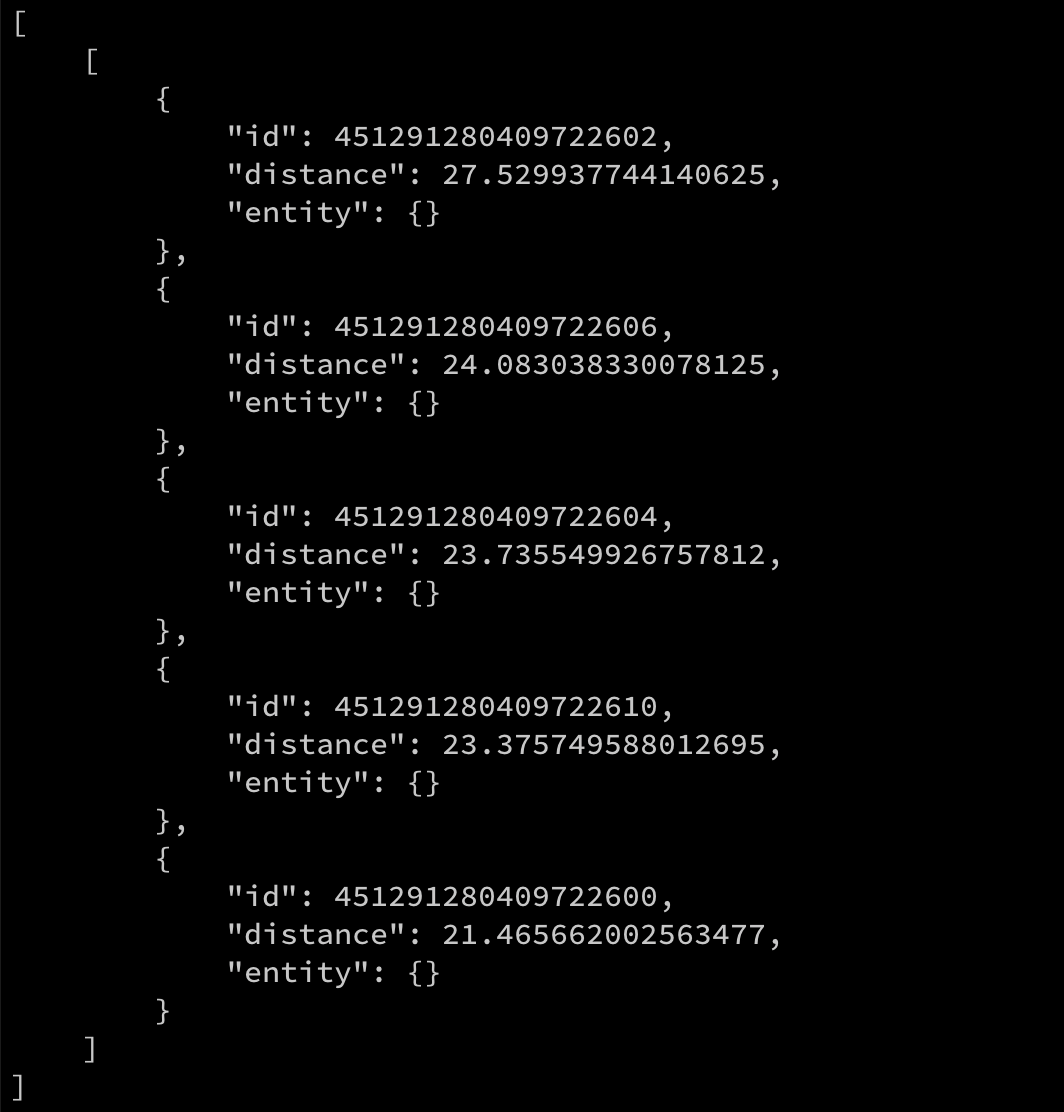

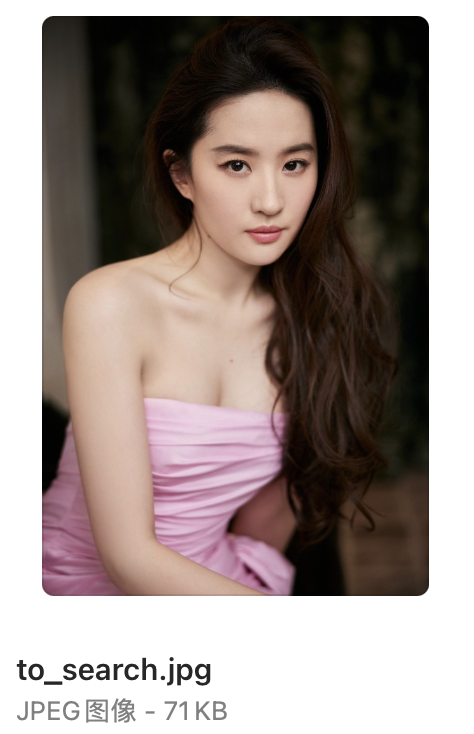

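The two images above show the query image (`to_search.jpg`) and the image for the top-ranked id, i.e. the closest match returned by the search.

The match is indeed Fairy Sister.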
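Whether the `IP` score behaves like a similarity you can compare across queries depends on vector length. If embeddings are scaled to unit length, the inner product equals cosine similarity, and it ranks results the same way as Euclidean (L2) distance, because for unit vectors $\lVert a-b\rVert^2 = 2 - 2\,a\cdot b$. Whether Towhee's `image-embedding` pipeline normalizes its output is not stated in this post, so the sketch below normalizes explicitly; `l2_normalize` and `ip_and_l2` are hypothetical helper names:

```python
import numpy as np


def l2_normalize(v):
    """Scale a vector to unit length (returns it unchanged if it is all zeros)."""
    v = np.asarray(v, dtype=np.float64)
    norm = np.linalg.norm(v)
    return v if norm == 0 else v / norm


def ip_and_l2(a, b):
    """Return (inner product, squared L2 distance) of the unit-normalized vectors."""
    a, b = l2_normalize(a), l2_normalize(b)
    ip = float(a @ b)
    l2_sq = float(np.sum((a - b) ** 2))
    # For unit vectors: ||a - b||^2 = 2 - 2 * (a . b), so a larger IP means a smaller L2 distance.
    return ip, l2_sq
```

So with `IP` the best hit has the largest `distance` value, whereas with `L2` it would have the smallest.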